Debugging a Robot in Simulation Before You Burn Wires

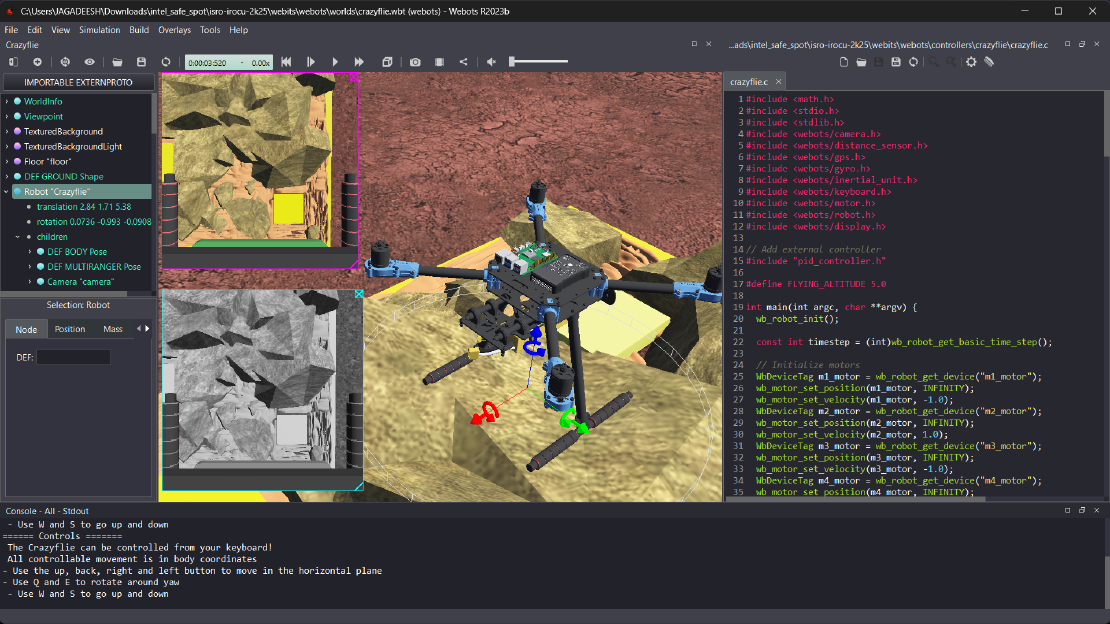

Hardware does not come with an undo button. Once you power it on, mistakes ranging from reversed wiring to faulty code can cause costly damage: motors may overheat, printed circuit boards (PCBs) can be fried, and sensors may break. These issues turn exciting projects into frustrating repair sessions. The autonomous drone shown above, developed in Webots for GNSS-denied environments as part of the ISRO Robotics Challenge, is a good example of a project where careful planning, testing, and hardware safety were critical at every step.

Building a robot requires a fusion of mechanical engineering, electronics, and software development. Each component adds complexity and risk. Even carefully assembled hardware can fail due to unexpected interactions, timing errors, or unforeseen edge cases. For this reason, simulation has become indispensable in modern robotics development. It offers a safe, controlled environment for troubleshooting, iteration, and validation before risking physical damage.

It’s often said that simulations don’t match real-world behavior. In many cases, that belief comes from experience with the wrong tools—or not knowing how to use the right ones to their full potential. When applied thoughtfully, simulation is one of the most valuable steps in the engineering process. It doesn’t aim to replace physical testing, but it helps you explore possibilities, spot early failures, and make more informed decisions before anything is built.

Simulations won’t capture every detail. Real-world factors like friction, wear, or long-term fatigue are hard to model precisely. But even rough estimates can be extremely helpful. For example, you might not know exactly when a part will wear out, but simulation can still help you judge whether that is more likely after 500 cycles or after 10,000, which is enough to guide better choices early on.

Consider a car suspension system. Simulating how it behaves under different loads or road conditions won’t cover every variable, but it will give insight into stress points, spring performance, and damping behavior. That saves time, reduces rework, and makes later testing more focused and meaningful.
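To make the suspension example concrete, here is a minimal Python sketch. It assumes a one-degree-of-freedom mass-spring-damper model (essentially a quarter-car with a rigid tire) and made-up parameter values, drives the model over a 5 cm step bump, and reports how far the body overshoots for different carried loads.

```python
from dataclasses import dataclass


@dataclass
class Suspension:
    mass: float       # sprung mass carried by one wheel [kg]
    stiffness: float  # spring rate [N/m]
    damping: float    # damper coefficient [N*s/m]


def step_response(s, bump=0.05, dt=1e-3, t_end=3.0):
    """Simulate body height x(t) after the road steps up by `bump` metres at t = 0.

    x is measured from static equilibrium, so gravity drops out of the equation.
    Returns (peak_height, overshoot_fraction) using semi-implicit Euler integration.
    """
    x, v = 0.0, 0.0  # body displacement [m] and velocity [m/s]
    peak = 0.0
    for _ in range(int(t_end / dt)):
        # Spring acts on displacement relative to the road; road velocity is zero after the step.
        force = -s.stiffness * (x - bump) - s.damping * v
        v += (force / s.mass) * dt  # update velocity first...
        x += v * dt                  # ...then position with the new velocity
        peak = max(peak, x)
    return peak, (peak - bump) / bump


# Hypothetical spring and damper, three different loads.
for load in (250.0, 400.0, 550.0):
    peak, overshoot = step_response(Suspension(mass=load, stiffness=20000.0, damping=1500.0))
    print(f"{load:.0f} kg: peak {peak * 100:.1f} cm, overshoot {overshoot:.0%}")
```

With these illustrative values, the heavier load lowers the damping ratio (c divided by 2√(km)), so the body overshoots more. A more realistic study would add tire stiffness, unsprung mass, and measured road profiles.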

The same applies across engineering—from robots and drones to embedded systems and mechanical linkages. Simulation helps reduce guesswork, not eliminate all uncertainty.

With that in mind, let’s now focus on simulation tools designed specifically for robotics, where virtual environments allow safe, iterative development of autonomous systems using physics-based testing and sensor feedback.

Why Simulation Should Be Your First Step

Testing robotics systems on physical hardware can be slow, risky, and expensive. Running a new control algorithm directly on a robot means that every bug risks damaging motors, servos, or electronics. Additionally, resetting or fixing hardware after a failure is often time-consuming.

Simulation provides a virtual workspace where you can freely test your ideas. It lets you push your robot’s limits without concern for physical harm. For instance, a line-following robot that veers off course in the real world could wear down its tires or crash. In simulation, the same behavior is simply a failure state you can reset in seconds.

Beyond safety, simulation accelerates development cycles. You can rapidly test variations, try extreme cases, and collect data for analysis—all without hardware setup. This speed allows more design exploration and better performance tuning before physical trials.

Exploring Popular Robotics Simulation Software

Gazebo (formerly Ignition Gazebo)

Gazebo is one of the most widely adopted simulators in robotics, especially for projects built around the Robot Operating System (ROS). Its strengths include:

- Accurate Physics Simulation: Supports rigid body dynamics, collision detection, joint constraints, and sensors like cameras, IMUs, and LIDAR.

- Extensibility: Custom plugins allow developers to add specialized behaviors, sensor models, or controllers.

- Integration with ROS: Facilitates seamless interaction between simulation and ROS nodes, enabling realistic sensor data streaming and control commands.

Gazebo’s physics engine realistically models gravity, friction, and inertia, which is critical when validating mechanical designs and control strategies. For example, you can simulate a robotic arm lifting an object, observing torque requirements and joint limits without risking hardware damage.
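Whatever simulator you use, it helps to sanity-check its joint torque readings against a hand calculation. The sketch below assumes a planar two-link arm with uniform links and a point payload at the tip, and computes only the static torque needed to hold a pose against gravity (no acceleration, friction, or gearbox losses). All dimensions and masses are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration [m/s^2]


def holding_torques(theta1, theta2, l1, l2, m1, m2, payload):
    """Static gravity torques [N*m] at the shoulder and elbow of a planar two-link arm.

    theta1: shoulder angle above horizontal [rad]; theta2: elbow angle relative to link 1 [rad].
    Links are uniform rods (centre of mass at mid-length); the payload is a point mass at the tip.
    """
    c1 = math.cos(theta1)
    c12 = math.cos(theta1 + theta2)
    # Elbow supports link 2 and the payload.
    elbow = G * (m2 * (l2 / 2) * c12 + payload * l2 * c12)
    # Shoulder carries link 1, the weight of link 2 and payload acting at the elbow,
    # plus the elbow's own load torque.
    shoulder = G * (m1 * (l1 / 2) * c1 + (m2 + payload) * l1 * c1) + elbow
    return shoulder, elbow


# Hypothetical arm: 30 cm and 25 cm links, 0.4 kg and 0.3 kg, holding 0.5 kg fully outstretched.
shoulder, elbow = holding_torques(0.0, 0.0, l1=0.30, l2=0.25, m1=0.4, m2=0.3, payload=0.5)
print(f"shoulder {shoulder:.2f} N*m, elbow {elbow:.2f} N*m")
```

The fully outstretched pose is the worst case for static load. If a servo’s rated torque sits close to the shoulder figure, the extra torque needed to accelerate the arm during motion will likely push it over.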

Webots

Webots offers a user-friendly environment with a library of pre-built robots and sensors. It is popular in academia and education due to its accessibility and versatility.

- Sensor Simulation: Supports cameras, GPS, LIDAR, and sonar sensors.

- Multi-language Support: Controllers can be written in C, C++, Python, Java, or MATLAB, and Webots also integrates with ROS.

- Rich Visualization: Allows realistic rendering of environments and robots.

Webots is excellent for prototyping robot navigation, sensor fusion, and multi-agent behaviors, such as swarm robotics or cooperative tasks.

CoppeliaSim (formerly V-REP)

CoppeliaSim excels in fast prototyping, multi-robot simulation, and scripting flexibility.

- Embedded Lua Scripting: Enables complex robot logic directly inside the simulation.

- Multiple Physics Engines: Lets you switch between engines such as Bullet, ODE, and Vortex.

- Complex Robots: Ideal for testing articulated arms, humanoids, and swarms.

It is particularly useful for robotics researchers testing novel kinematics, grasping algorithms, or coordination strategies.

Unity and Unreal Engine

Game engines like Unity and Unreal offer photorealistic rendering and advanced physics but require more setup.

- Visual Fidelity: Useful for human-robot interaction research or virtual reality applications.

- Physics Customization: Allows integration of custom robot models and environments.

- Cross-Platform: Simulations can be deployed on VR headsets or mobile devices.

These engines are increasingly used to simulate drones, autonomous vehicles, or robots interacting in complex, visually rich environments.

MATLAB and Simulink

These platforms are favored in control system design and embedded systems prototyping.

- Control System Modeling: Easily create and tune PID controllers, state machines, and observers.

- Co-simulation: Interfaces with physical hardware and other simulators.

- Real-Time Testing: Supports Hardware-in-the-Loop (HIL) simulations.

Simulating Mechanical Behavior and Motion Control

Mechanical failures often originate from unexpected or excessive forces on robot components. Inverse kinematics solutions that ignore joint limits, or sudden acceleration spikes in a trajectory, can physically damage servos, gears, or frames.

Simulators allow you to:

- Test mechanical limits by setting maximum joint angles and torque constraints.

- Visualize robot trajectories in 3D, including velocities and accelerations.

- Detect collisions between robot parts or with obstacles.

- Simulate dynamic loads such as inertia, friction, and impact forces.
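The first two checks in the list can be automated even before a 3D view is open. This minimal sketch assumes a single joint’s trajectory sampled at a fixed time step and estimates velocity and acceleration with finite differences. The limit values in the example are hypothetical, so substitute the figures from your servo’s datasheet.

```python
from dataclasses import dataclass


@dataclass
class JointLimits:
    min_pos: float  # [rad]
    max_pos: float  # [rad]
    max_vel: float  # [rad/s]
    max_acc: float  # [rad/s^2]


def check_trajectory(positions, dt, limits):
    """Return (sample_index, reason) for every limit violation in a sampled joint trajectory.

    positions: joint angles [rad] sampled every dt seconds. Velocity uses a backward
    difference and acceleration a central second difference.
    """
    violations = []
    for i, q in enumerate(positions):
        if not limits.min_pos <= q <= limits.max_pos:
            violations.append((i, "position"))
    for i in range(1, len(positions)):
        vel = (positions[i] - positions[i - 1]) / dt
        if abs(vel) > limits.max_vel:
            violations.append((i, "velocity"))
    for i in range(1, len(positions) - 1):
        acc = (positions[i + 1] - 2 * positions[i] + positions[i - 1]) / dt**2
        if abs(acc) > limits.max_acc:
            violations.append((i, "acceleration"))
    return violations


limits = JointLimits(min_pos=-1.5, max_pos=1.5, max_vel=2.0, max_acc=50.0)
smooth = [0.01 * i for i in range(50)]  # steady 1 rad/s sweep
jerky = [0.0, 0.0, 0.5, 0.5]            # instantaneous 0.5 rad jump
print(check_trajectory(smooth, 0.01, limits))
print(check_trajectory(jerky, 0.01, limits))
```

The jerky trajectory stays inside the position limits but is flagged for velocity and acceleration, which is exactly the kind of command that strips gears on real hardware.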

For example, a six-axis robotic arm performing complex pick-and-place tasks can be analyzed in CoppeliaSim or Gazebo to confirm smooth movements and avoid collisions before being built.

This kind of virtual stress-testing is critical, especially in custom-built or highly integrated systems where hardware replacement costs are high.

Sensor Simulation and Synthetic Data Generation

Sensor data is inherently noisy, affected by environmental conditions, and often unpredictable. Testing sensor-dependent algorithms on real hardware is complicated by fluctuating lighting, temperature changes, and electromagnetic interference.

Simulators provide:

- Synthetic sensor data that mimics real-world noise, resolution, latency, and distortion.

- Emulated GPS signals with configurable drift or dropout.

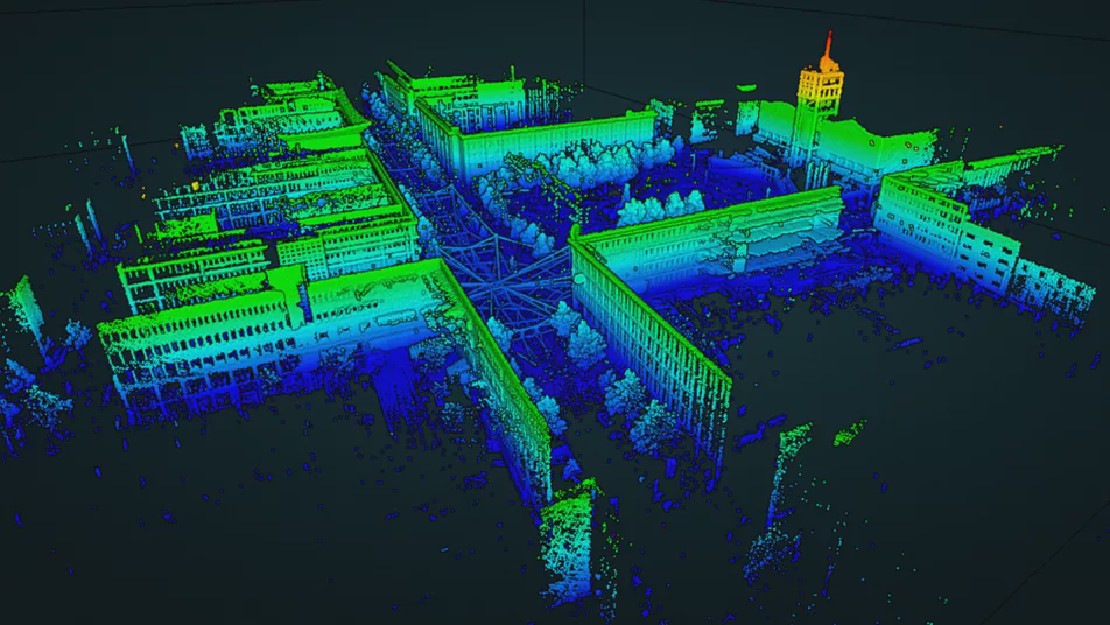

- Simulated LIDAR and sonar scans with realistic point clouds.

- Virtual cameras replicating different resolutions, frame rates, and field of view, supporting early-stage computer vision development.
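Most simulators let you configure sensor noise directly, but writing a small noise model yourself makes it clear what is being simulated. This Python sketch, with purely illustrative parameters, adds white noise, a slowly accumulating random-walk drift, and occasional dropouts to a ground-truth position, similar in spirit to the GPS drift and dropout described above.

```python
import random


class NoisyGPS:
    """Wraps a ground-truth position with white noise, slow drift, and random dropouts.

    All parameters are illustrative defaults, not values from any real receiver.
    """

    def __init__(self, noise_std=0.5, drift_std=0.02, dropout_prob=0.05, seed=None):
        self.noise_std = noise_std        # per-reading white noise [m]
        self.drift_std = drift_std        # random-walk step added to the bias each reading [m]
        self.dropout_prob = dropout_prob  # chance that a reading is lost
        self.rng = random.Random(seed)
        self.bias = [0.0, 0.0]            # accumulated drift in x and y [m]

    def read(self, true_x, true_y):
        # Drift keeps accumulating even when a reading is dropped.
        self.bias[0] += self.rng.gauss(0.0, self.drift_std)
        self.bias[1] += self.rng.gauss(0.0, self.drift_std)
        if self.rng.random() < self.dropout_prob:
            return None  # simulated loss of fix
        return (true_x + self.bias[0] + self.rng.gauss(0.0, self.noise_std),
                true_y + self.bias[1] + self.rng.gauss(0.0, self.noise_std))


gps = NoisyGPS(seed=42)
for step in range(5):
    print(step, gps.read(true_x=10.0, true_y=5.0))
```

Returning `None` on dropout forces the code that consumes the reading to handle a missing fix explicitly, which is usually the behavior you want to exercise.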

For example, in Webots or Gazebo, you can simulate a robot navigating a warehouse environment with virtual LIDAR data to develop and validate obstacle avoidance algorithms.
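As a starting point for that kind of experiment, the following sketch implements a deliberately simple reactive rule on a single planar LIDAR scan: drive forward while the cone ahead is clear, otherwise stop and turn toward the beam with the most clearance. The parameter names mirror the `angle_min`, `angle_increment`, and `ranges` fields of the ROS `sensor_msgs/LaserScan` message, but the function itself is a hypothetical example, not part of any library.

```python
import math


def avoid_obstacles(ranges, angle_min, angle_increment,
                    stop_distance=0.4, cruise_speed=0.3, front_half_angle=math.radians(15)):
    """Minimal reactive obstacle avoidance on one planar LIDAR scan.

    ranges[i] is the distance [m] measured at angle_min + i * angle_increment [rad],
    with 0 rad straight ahead and positive angles to the left.
    Returns (linear_speed [m/s], heading [rad]) for a hypothetical velocity controller.
    """
    # Drop invalid beams (inf/NaN for no return, or non-positive values).
    valid = [(r, angle_min + i * angle_increment)
             for i, r in enumerate(ranges) if math.isfinite(r) and r > 0.0]
    if not valid:
        return 0.0, 0.0  # no usable data: stop rather than drive blind
    front = [r for r, a in valid if abs(a) <= front_half_angle]
    if front and min(front) < stop_distance:
        _, best_angle = max(valid)  # beam with the most clearance
        return 0.0, best_angle      # stop and turn toward open space
    return cruise_speed, 0.0        # path ahead is clear: keep going straight


# Seven beams from -0.3 rad to +0.3 rad; an obstacle 0.2 m dead ahead, open space on the left.
print(avoid_obstacles([1.0, 1.0, 1.0, 0.2, 1.0, 1.0, 3.0], angle_min=-0.3, angle_increment=0.1))
```

A rule this simple will get stuck in corners; the value of running it in simulation first is that you can find those failure cases quickly and replace it with a proper planner.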

Control Algorithm Tuning and Feedback Loop Testing

Tuning controllers like PID, MPC, or adaptive algorithms directly on hardware can be risky and inefficient. Aggressive gains can cause overshoot, oscillation, and mechanical stress, while conservative ones leave the system sluggish.

In simulation, you can:

- Tune parameters interactively and observe system responses in real time.

- Apply disturbances or sudden setpoint changes to test controller robustness.

- Visualize control variables such as error, output commands, and system states.

- Run automated test sequences to evaluate stability over many iterations.
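A simulated loop makes these experiments cheap. The sketch below assumes a first-order plant (a rough model of, say, a motor’s speed response) with a made-up time constant and gain. It runs a discrete PID controller with actuator saturation through a setpoint step followed by an input disturbance, and returns two comparison metrics: overshoot and integrated absolute error (IAE). There is no anti-windup, so aggressive integral gains may show windup against the saturation limit.

```python
def simulate_pid(kp, ki, kd, setpoint=1.0, tau=0.5, gain=2.0, dt=1e-3, t_end=10.0,
                 disturbance=-0.5, t_disturb=5.0, u_max=5.0):
    """Closed-loop PID response on an assumed first-order plant.

    Plant: tau * dy/dt = -y + gain * (u + d), where d is an input disturbance that
    switches on at t_disturb. The setpoint is applied at t = 0.
    Returns (overshoot as a fraction of the setpoint, integrated absolute error).
    """
    y = 0.0
    integral = 0.0
    prev_error = setpoint - y
    peak = y
    iae = 0.0
    for n in range(int(t_end / dt)):
        t = n * dt
        error = setpoint - y
        integral += error * dt
        derivative = (error - prev_error) / dt
        prev_error = error
        u = kp * error + ki * integral + kd * derivative
        u = max(-u_max, min(u_max, u))  # actuator saturation (no anti-windup)
        d = disturbance if t >= t_disturb else 0.0
        y += dt * (-y + gain * (u + d)) / tau  # forward-Euler plant update
        peak = max(peak, y)
        iae += abs(error) * dt
    return (peak - setpoint) / setpoint, iae


# Automated sweep over a few hypothetical gain sets.
for gains in [(1.0, 0.0, 0.0), (1.0, 2.0, 0.0), (5.0, 20.0, 0.05)]:
    overshoot, iae = simulate_pid(*gains)
    print(f"kp, ki, kd = {gains}: overshoot {overshoot:+.1%}, IAE {iae:.3f}")
```

P-only control settles below the setpoint and sags further when the disturbance hits, while adding integral action removes the steady-state error. Sweeping gains like this and ranking them by IAE is a simple version of the automated test sequences listed above.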

Such systematic tuning shortens development time and reduces hardware wear.

Navigation, Mapping, and Path Planning

Testing mobile robot navigation algorithms on physical hardware is logistically challenging: you need floor space, physical obstacles, and repeated manual resets between runs.

Simulation lets you:

- Create complex maps and dynamic environments with moving obstacles.

- Simulate sensor inputs for localization algorithms (e.g., SLAM).

- Test various path planning algorithms and obstacle avoidance strategies.

- Collect detailed metrics on path efficiency, collisions, and timing.
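Path planners are easy to compare on a simple occupancy grid before moving to a full simulator. Here is a compact A* sketch on a 4-connected grid with a Manhattan-distance heuristic. It returns the path and the number of expanded cells, two of the metrics you might log when comparing planners; the grid itself is a made-up example.

```python
import heapq


def astar(grid, start, goal):
    """A* on a 4-connected occupancy grid (0 = free, 1 = obstacle).

    Returns (path, expanded): path is a list of (row, col) cells from start to goal
    (empty if unreachable), and expanded counts cells popped from the priority queue.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance is admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {start: None}
    cost = {start: 0}
    expanded = 0
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if g > cost[cell]:
            continue  # stale entry superseded by a cheaper route
        expanded += 1
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1], expanded
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = g + 1
                if new_cost < cost.get(nxt, float("inf")):
                    cost[nxt] = new_cost
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_cost + h(nxt), new_cost, nxt))
    return [], expanded


warehouse = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
path, expanded = astar(warehouse, start=(0, 0), goal=(2, 0))
print(f"{len(path) - 1} moves, {expanded} cells expanded: {path}")
```

Swapping in another planner with the same signature makes it straightforward to run both over the same set of maps and compare path length and search effort.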

For instance, Gazebo allows simulation of robots navigating city blocks or indoor environments with pedestrians, which would be costly and time-consuming to replicate physically.

Fault Injection and Robustness Testing

Unexpected faults like sensor failures, communication loss, or power drops pose serious risks.

Simulation enables fault injection scenarios such as:

- Simulating a failed IMU or GPS sensor during operation.

- Injecting corrupted sensor data or signal delays.

- Simulating battery voltage drops or power interruptions.

- Testing emergency stop responses.
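Fault injection can be as simple as wrapping a sensor read. The sketch below is a hypothetical wrapper (not part of any simulator’s API) that can kill a sensor after a given number of readings, delay its output, or corrupt readings with outliers. It is paired with a toy controller policy that commands an emergency stop once valid data has been missing for too many consecutive steps.

```python
import collections
import random


class FaultInjector:
    """Hypothetical wrapper that injects faults into any zero-argument sensor read function.

    fail_after:   readings after this count return None (a dead sensor); None disables it.
    delay:        outputs lag the true signal by this many readings (the first sample is
                  repeated until the buffer fills).
    corrupt_prob: probability that a reading is replaced by a gross outlier.
    """

    def __init__(self, read_fn, fail_after=None, delay=0, corrupt_prob=0.0, seed=0):
        self.read_fn = read_fn
        self.fail_after = fail_after
        self.buffer = collections.deque(maxlen=delay + 1)
        self.corrupt_prob = corrupt_prob
        self.rng = random.Random(seed)
        self.count = 0

    def read(self):
        self.count += 1
        self.buffer.append(self.read_fn())  # keep sampling the true signal
        if self.fail_after is not None and self.count > self.fail_after:
            return None
        value = self.buffer[0]  # oldest buffered sample = delayed reading
        if self.rng.random() < self.corrupt_prob:
            value = value * 1000.0  # gross outlier
        return value


def control_step(reading, state, max_missed=3, plausible_limit=100.0):
    """Toy policy: tolerate a few missing or implausible readings, then demand a stop."""
    if reading is None or abs(reading) > plausible_limit:
        state["missed"] += 1
    else:
        state["missed"] = 0
    return "EMERGENCY_STOP" if state["missed"] > max_missed else "RUN"


# A healthy constant signal that dies after 10 readings.
sensor = FaultInjector(lambda: 1.0, fail_after=10)
state = {"missed": 0}
print([control_step(sensor.read(), state) for _ in range(15)])
```

In a real project the wrapper would sit between the simulator’s sensor interface and your control code, so the controller cannot tell injected faults from genuine ones.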

These tests help ensure your system can safely handle real-world faults.

Transitioning from Simulation to Hardware

While simulation can closely approximate reality, some factors are difficult to replicate precisely, including thermal effects, electrical noise, and material inconsistencies.

A staged approach to hardware testing is recommended:

- Begin with low-power, low-risk tests.

- Monitor electrical current, motor temperatures, and mechanical stresses.

- Implement manual overrides and emergency stops.

- Gradually increase complexity while verifying system behavior.
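The staged approach can also be scripted so that a bring-up run stops itself. In the sketch below, `apply_duty` and `read_current` are hypothetical stand-ins for whatever motor-driver and current-sensor code your hardware uses, and the stage fractions and 2 A limit are illustrative. A fake motor model lets you dry-run the logic before it touches hardware. A software cut-off like this complements, rather than replaces, the manual overrides and emergency stops listed above.

```python
def staged_bringup(apply_duty, read_current, stages=(0.1, 0.25, 0.5, 0.75, 1.0),
                   current_limit=2.0, samples_per_stage=50):
    """Raise the motor command in stages, cutting power if current exceeds a limit.

    apply_duty(fraction) and read_current() -> amps are placeholders for your own
    hardware hooks (hypothetical, not a real driver API). Returns the highest stage
    that completed without tripping the limit, or 0.0 if the first stage tripped.
    """
    passed = 0.0
    for duty in stages:
        apply_duty(duty)
        for _ in range(samples_per_stage):
            if read_current() > current_limit:
                apply_duty(0.0)  # software cut-off; keep a physical e-stop as well
                return passed
        passed = duty
    apply_duty(0.0)  # finish the test with the motor off
    return passed


# Fake motor for a dry run: current rises linearly with duty (3 A at full command).
motor = {"duty": 0.0}


def fake_apply(duty):
    motor["duty"] = duty


def fake_current():
    return 3.0 * motor["duty"]


print(staged_bringup(fake_apply, fake_current))  # prints 0.5
```

With the fake motor, the 75% stage draws 2.25 A and trips the limit, so the run stops and reports 50% as the last safe stage.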

Simulations prepare your system, but careful hardware validation remains essential.

Conclusion

Simulation is a cornerstone of responsible and efficient robotics development. It provides a safe environment to iterate faster, catch errors early, and improve system robustness. Robots that pass extensive simulated testing usually reach real-world trials with far fewer surprises.

Before powering on your hardware, ask: Have I validated this thoroughly in simulation? Taking the extra time to simulate can save you wires, money, and frustration.

Related Posts

What is SLAM? And Why It’s the Brain of Mobile Robots

In robotics, SLAM—Simultaneous Localization and Mapping—is regarded as one of the most fundamental and complex problems. At its core, SLAM addresses a deceptively simple question: “Where am I, and what does the world around me look like?”

Read more

My RosConIN'24 (+GNOME Asia Summit) Experience

Last year, I missed ROSCon India due to exams and, honestly, had no idea what I was missing out on. This year, though, I made it, and it turned out to be more than I ever imagined. The two days I spent at ROSConIN'24 were nothing short of transformative, and this blog itself is a result of the inspiration I drew from the event.

Read more

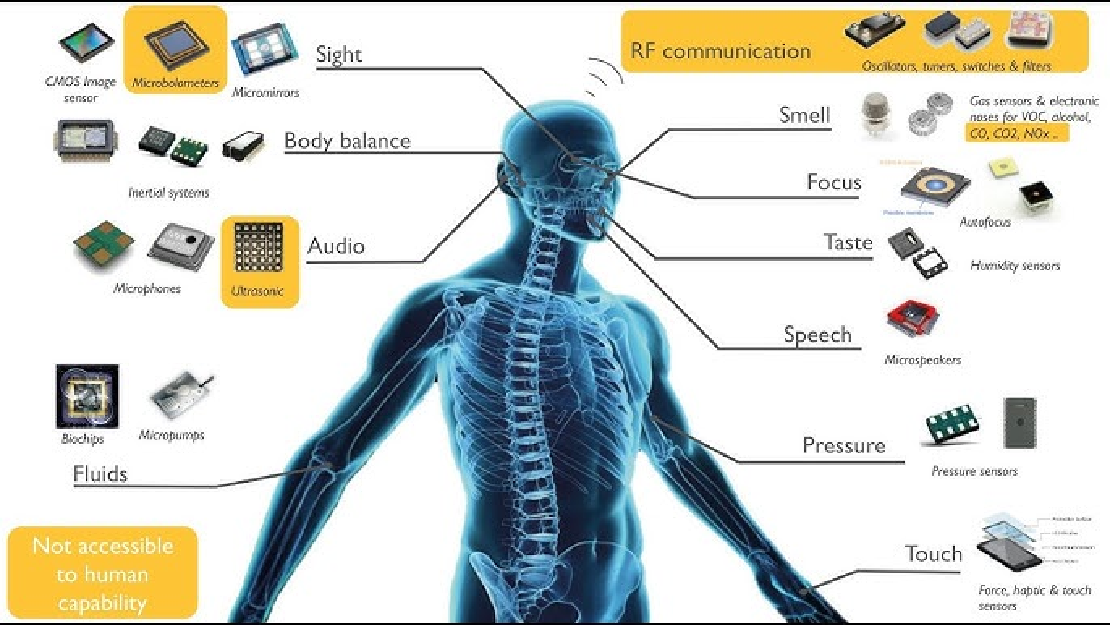

Sensors in Robotics: How Ultrasonic, LiDAR, and IMU Work

Sensors are to robots what eyes, ears, and skin are to humans—but with far fewer limits. While we rely on just five senses, robots can be equipped with many more, sensing distances, movement, vibrations, orientation, light intensity, and even chemical properties. These sensors form the bridge between the digital intelligence of a robot and the physical world it operates in.

Read more