ROS 2 vs ROS 1: What Changed and Why It Matters

Is ROS 1 still the right choice for your next robotics project, with its well-established tools and wide community support? Or, given the growing demand for real-time performance, scalability, and modern middleware, is it finally time to make the move to ROS 2?

At the end of this month, May 2025, official support for ROS 1 comes to a close as Noetic, its final distribution, reaches end of life. This marks a major turning point in the robotics software ecosystem. For over a decade, ROS 1 (Robot Operating System) has served as the backbone of robotic development across research and industry. But the shift to ROS 2 is more than just an upgrade: it is a rethinking of the platform from the ground up.

This article provides a clear look at the changes introduced in ROS 2, why ROS 1 is being sunset, and what this means for developers, researchers, and organizations working in robotics.

What is ROS?

ROS (Robot Operating System) is a flexible framework for writing robot software. Despite the name, it’s not a full-fledged OS but a middleware layer that sits on top of your actual operating system, handling the complexity of communication, modularity, and distributed execution.

At its core, ROS provides:

- A message-passing architecture for processes (nodes) to communicate.

- Tools to visualize data, record logs, and debug system behavior.

- Packages to handle everything from perception and motion planning to hardware abstraction.

It’s the glue that connects sensors, control logic, actuators, and high-level decision-making in a robotics application. In ROS, each node performs a specific task—say, reading from a LIDAR sensor or planning a path—and publishes/subscribes to messages over topics. This enables a clean, modular architecture where components are reusable and decoupled.
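
The decoupling described above can be sketched in a few lines of plain Python. This is a toy, in-process stand-in for topic-based messaging, not a ROS API: `ToyBus`, the topic names `/scan` and `/cmd`, and the 0.5 m threshold are all assumptions made for illustration.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List


class ToyBus:
    """In-process stand-in for topic-based messaging (hypothetical, not ROS)."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: Any) -> None:
        # Deliver to every callback registered on the topic. The publisher
        # never holds a reference to any particular subscriber.
        for callback in list(self._subscribers[topic]):
            callback(message)


bus = ToyBus()
commands: List[str] = []


def planner(ranges: List[float]) -> None:
    # "Planner node": reads range data from /scan, publishes a command on /cmd.
    bus.publish("/cmd", "stop" if min(ranges) < 0.5 else "go")


bus.subscribe("/scan", planner)
bus.subscribe("/cmd", commands.append)  # "motor node": just records commands

# "LIDAR node": publishes two scans without knowing who is listening.
bus.publish("/scan", [2.0, 1.2, 0.4])
bus.publish("/scan", [2.0, 1.2, 0.9])
print(commands)  # ['stop', 'go']
```

Swapping the planner for a different implementation only requires subscribing a new callback to `/scan`; the publisher side does not change, which is the property the node/topic model is meant to provide.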

Why ROS? What Difference Does It Make?

Building robot software is not like writing a web app or scripting a microcontroller. Robots deal with real-world inputs—noisy data, real-time constraints, hardware failures—and are inherently distributed systems. Without a framework like ROS, you’d have to write your own communication protocols, serialization formats, data pipelines, logging infrastructure, and process management tools.

ROS abstracts these repetitive tasks. It provides:

- Standardized communication: Publish/subscribe and service-based interaction.

- Sensor integration: Drivers for cameras, IMUs, GPS, and more.

- Visualization: RViz, rqt, and introspection tools.

- Hardware abstraction: URDF and ROS Control for modeling and interfacing with robot hardware.

With ROS, developers focus on algorithms and behavior, not the plumbing. It also offers a large open-source ecosystem, so you’re rarely starting from scratch. Whether you’re working on SLAM, navigation, or perception, someone has likely already done much of the work.

Why ROS 1 Was Right (and Also Wrong)

ROS 1 got a lot of things right. It gave researchers a shared language, simplified complex system integration, and accelerated robotics development. But its architecture was never built with real-time guarantees, security, or modern networking in mind.

What ROS 1 did well:

- Made robotics accessible and modular.

- Encouraged rapid prototyping and experimentation.

- Built a massive ecosystem and developer base.

- Created tools like rosbag, RViz, and rqt that became industry standards.

Where ROS 1 struggled:

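- Discovery relied on a centralized ROS Master (roscore), a single point of failure: nodes that were already connected kept exchanging data if it died, but no new connections could be made.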

- No support for real-time scheduling or deterministic behavior.

- Lacked Quality of Service (QoS) configuration for topics.

- Poor multi-robot support: robots typically shared a single ROS Master, and keeping them apart meant manual namespacing or add-on multi-master tools.

- No native support for embedded devices or resource-constrained platforms.

- No built-in security model—data over the network was unencrypted and unauthenticated.

These issues became roadblocks as robotics moved out of the lab and into industrial, consumer, and mission-critical environments.

What Changed? Why ROS 2 is Better

ROS 2 was designed to address the structural limitations of ROS 1. It’s a complete architectural rewrite based on modern software engineering practices and real-world deployment needs.

Here’s a deeper look at what’s new:

DDS-Based Communication (No More ROS Master)

ROS 2 builds its communication layer on the Data Distribution Service (DDS), an Object Management Group (OMG) standard for publish/subscribe middleware. DDS discovery is decentralized, so there’s no need for a master node. Nodes can come and go freely, discover each other dynamically, and communicate peer-to-peer.

This enables:

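- Distributed multi-robot systems with little configuration, as long as the machines can reach each other on the network (discovery uses multicast by default).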

- Fault tolerance—if a node dies, the system doesn’t collapse.

- Scalability across networks, platforms, and locations.
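
One way to see why no master is needed: DDS implementations speak the RTPS wire protocol, which derives well-known UDP ports from a domain ID (in ROS 2 this is usually set through the `ROS_DOMAIN_ID` environment variable). Every participant can compute the same ports independently, so announcements reach peers without a central registry. The sketch below reproduces the default port arithmetic from the RTPS specification; the function and class names are illustrative, and individual DDS vendors may let you override these defaults.

```python
from dataclasses import dataclass

# Default constants from the RTPS specification's port mapping.
PORT_BASE = 7400         # PB
DOMAIN_GAIN = 250        # DG
PARTICIPANT_GAIN = 2     # PG


@dataclass(frozen=True)
class RtpsPorts:
    discovery_multicast: int
    user_multicast: int
    discovery_unicast: int
    user_unicast: int


def rtps_default_ports(domain_id: int, participant_id: int) -> RtpsPorts:
    """Compute the default RTPS UDP ports (hypothetical helper name)."""
    base = PORT_BASE + DOMAIN_GAIN * domain_id
    return RtpsPorts(
        discovery_multicast=base + 0,   # offset d0
        user_multicast=base + 1,        # offset d2
        discovery_unicast=base + 10 + PARTICIPANT_GAIN * participant_id,  # offset d1
        user_unicast=base + 11 + PARTICIPANT_GAIN * participant_id,       # offset d3
    )


print(rtps_default_ports(domain_id=0, participant_id=0))
```

Two robots on different domain IDs end up on different port ranges, which is a simple way to keep their traffic apart on a shared network.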

Real-Time Support

Unlike ROS 1, ROS 2 was designed with real-time execution in mind. On a suitable operating system, you can:

- Run control loops with deterministic timing.

- Assign thread priorities and memory bounds.

- Interface directly with real-time operating systems (RTOS).

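Paired with a real-time operating system and carefully written code (no unbounded memory allocation or blocking calls in the control path), this makes ROS 2 viable for time-critical applications like industrial robot arms, autonomous vehicles, and flight controllers. ROS 2 on its own does not guarantee hard real-time behavior.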
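
As a small illustration of what a fixed-rate control loop involves, the sketch below schedules each cycle against an absolute deadline rather than sleeping for a fixed duration, so time spent in the control step does not accumulate as drift. It is plain Python, which is not a real-time environment, and `run_periodic` is a hypothetical helper rather than a ROS 2 API; it only shows the scheduling pattern. The clock and sleep functions are injectable so the logic can be exercised without real waiting.

```python
import time
from typing import Callable, List


def run_periodic(
    step: Callable[[], None],
    period_s: float,
    iterations: int,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> List[float]:
    """Call step() once per period; return how late each cycle started (seconds)."""
    lateness: List[float] = []
    next_deadline = clock()
    for _ in range(iterations):
        now = clock()
        if now < next_deadline:
            # Wait out the remainder of the current period.
            sleep(next_deadline - now)
            now = clock()
        lateness.append(now - next_deadline)
        step()
        # Advance by a fixed amount from the previous deadline, not from
        # "now", so a slow step does not shift every later cycle.
        next_deadline += period_s
    return lateness
```

On a general-purpose OS the returned lateness values will vary from run to run; shrinking and bounding that variation is what a real-time kernel, thread priorities, and preallocated memory are for.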

Quality of Service (QoS)

DDS allows developers to fine-tune how messages are delivered with QoS profiles. You can configure:

- Reliability (best effort vs reliable, where lost messages are retransmitted)

- Durability (do new subscribers get old messages?)

- Deadline and lifespan (how often messages must arrive, and how long they stay valid)

- Liveliness detection (detecting dead publishers)

This gives developers precise control over how nodes interact, especially in lossy or high-throughput networks.
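
QoS settings also decide whether a publisher and subscriber connect at all: a subscriber that requests more than the publisher offers is not matched. The sketch below models that rule for reliability and durability only. `ToyQoS` and `compatible` are hypothetical names for illustration, not part of rclpy, and real profiles carry more policies than shown here.

```python
from dataclasses import dataclass
from enum import IntEnum


class Reliability(IntEnum):
    BEST_EFFORT = 0
    RELIABLE = 1


class Durability(IntEnum):
    VOLATILE = 0
    TRANSIENT_LOCAL = 1  # late-joining subscribers receive stored messages


@dataclass(frozen=True)
class ToyQoS:
    reliability: Reliability
    durability: Durability


def compatible(offered: ToyQoS, requested: ToyQoS) -> bool:
    """True if the publisher's offer is at least as strong as the request."""
    return (
        offered.reliability >= requested.reliability
        and offered.durability >= requested.durability
    )


lidar_pub = ToyQoS(Reliability.BEST_EFFORT, Durability.VOLATILE)
logger_sub = ToyQoS(Reliability.RELIABLE, Durability.VOLATILE)
viewer_sub = ToyQoS(Reliability.BEST_EFFORT, Durability.VOLATILE)

print(compatible(lidar_pub, logger_sub))  # False: subscriber asks for more than is offered
print(compatible(lidar_pub, viewer_sub))  # True
```

A mismatch like the first case is a common reason a subscriber silently receives nothing, so it is worth checking the profiles on both ends when a topic looks dead.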

Security Built-In

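Security was an afterthought in ROS 1. In ROS 2, it is part of the design: the SROS2 tooling builds on the DDS-Security specification. It is disabled by default and, once enabled, provides: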

- Encryption of messages in transit

- Authentication of nodes

- Access control policies to restrict who can publish/subscribe

Security is crucial for commercial robotics, especially in fields like healthcare, defense, and autonomous transport.

Improved Node Lifecycle Management

ROS 2 introduces managed (lifecycle) nodes. A managed node can be explicitly configured, activated, deactivated, and shut down, moving between well-defined states as it goes. This allows:

- Cleaner startup and shutdown sequences

- Better error handling

- Easier introspection and supervision

This is particularly useful in systems that require staged initialization or watchdog monitoring.
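
The lifecycle can be pictured as a small state machine. The sketch below models the four primary states of a managed node and the transitions between them. It is a simplified illustration and not the rclpy lifecycle API: `ToyLifecycleNode` is a hypothetical class, and the intermediate transition states and error handling of real managed nodes are left out.

```python
from typing import Dict, Tuple

# (current state, transition) -> next state
TRANSITIONS: Dict[Tuple[str, str], str] = {
    ("unconfigured", "configure"): "inactive",
    ("inactive", "cleanup"): "unconfigured",
    ("inactive", "activate"): "active",
    ("active", "deactivate"): "inactive",
    ("unconfigured", "shutdown"): "finalized",
    ("inactive", "shutdown"): "finalized",
    ("active", "shutdown"): "finalized",
}


class ToyLifecycleNode:
    """Minimal model of a managed node's primary states (illustrative only)."""

    def __init__(self) -> None:
        self.state = "unconfigured"

    def trigger(self, transition: str) -> str:
        key = (self.state, transition)
        if key not in TRANSITIONS:
            raise ValueError(f"cannot '{transition}' while {self.state}")
        self.state = TRANSITIONS[key]
        return self.state


node = ToyLifecycleNode()
node.trigger("configure")   # load parameters, open devices
node.trigger("activate")    # start publishing and processing
print(node.state)  # active
```

A supervisor can use the rejected transitions to enforce staged startup, for example refusing to activate a motor driver that was never configured.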

Cross-Platform & Embedded Ready

ROS 2 is designed to work across platforms:

- Native support for Linux, Windows, and macOS (though Windows and macOS are not considered production-grade for most robotics deployments)

- Builds on ARM and embedded Linux devices

- Supports cross-compilation, and reaches microcontrollers through the micro-ROS project, which runs a ROS 2 client library on top of an RTOS

This means you can build a system with a Jetson for perception, a Raspberry Pi for control, and a desktop for planning—ROS 2 will tie it all together.

ROS 1 Doesn’t Stop Overnight

The end of official support doesn’t mean that ROS 1 systems will suddenly break. Existing applications and research projects built on ROS 1 will continue to run. But the risks will grow over time:

- No new bug fixes or security patches

- Incompatibility with newer operating systems and compilers

- Reduced community activity and package maintenance

- No support for evolving hardware or standards

For small-scale projects, this may not matter. But for production environments, long-term maintenance, and scalable deployments, ROS 2 is the way forward.

Final Thoughts

ROS 2 isn’t just a newer version of ROS—it’s a complete rethink, engineered for where robotics is headed. Real-time control, secure communication, decentralized systems, and embedded readiness are no longer optional—they’re essential.

Yes, migrating to ROS 2 can be a non-trivial effort. APIs are different. Not all ROS 1 packages have 1-to-1 equivalents. But the foundational improvements are worth it—and necessary.

If you’re starting a new robotics project in 2025, choosing ROS 1 now would be like choosing Python 2 for a new web app. It might work, but you’re building on borrowed time.

So—what changed? Everything. And that’s why it matters.

Related Posts

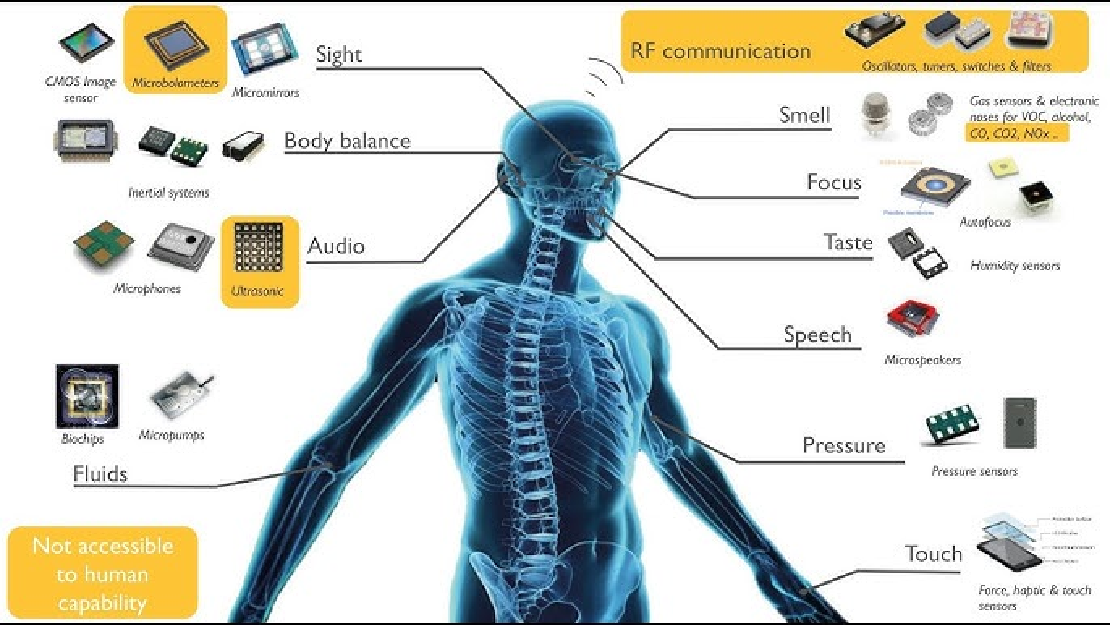

Sensors in Robotics: How Ultrasonic, LiDAR, and IMU Work

Sensors are to robots what eyes, ears, and skin are to humans—but with far fewer limits. While we rely on just five senses, robots can be equipped with many more, sensing distances, movement, vibrations, orientation, light intensity, and even chemical properties. These sensors form the bridge between the digital intelligence of a robot and the physical world it operates in.

Read more

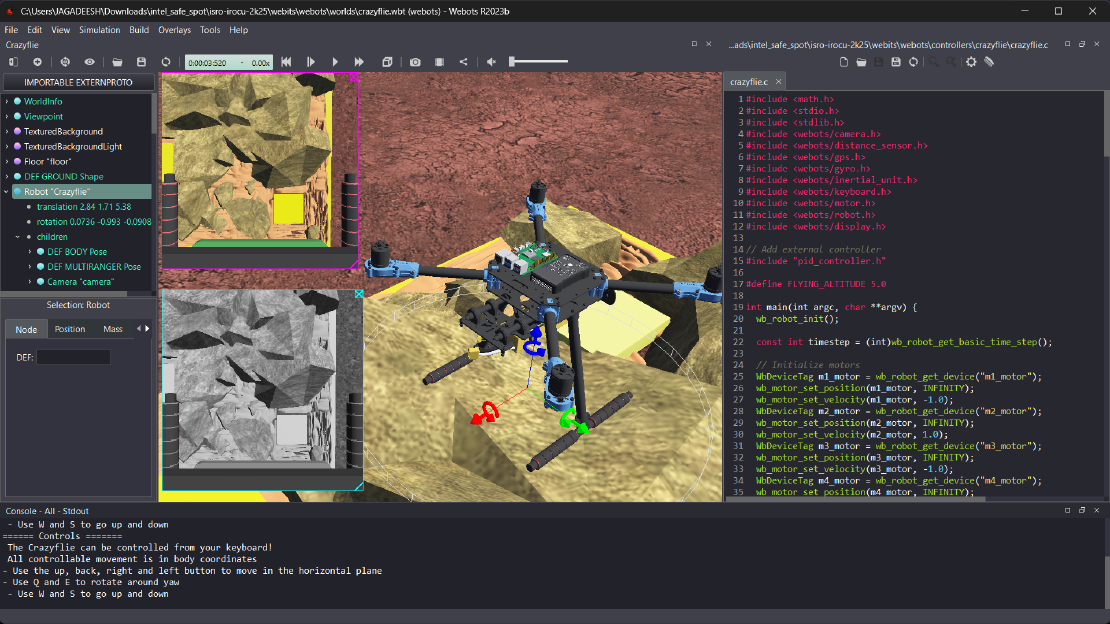

Debugging a Robot In Simulation Before You Burn Wires

Hardware does not come with an undo button. Once you power it on, mistakes—from reversed wiring to faulty code—can result in costly damage. Motors may overheat, printed circuit boards (PCBs) can be fried, and sensors may break. These issues turn exciting projects into frustrating repair sessions. The autonomous drone shown above, designed for GNSS-denied environments in webots as part of the ISRO Robotics Challenge, is a perfect example—where careful planning, testing, and hardware safety were critical at every step

Read more

Computer Vision vs. Sensor Fusion: Who Wins the Self-Driving Car Race?

Tesla’s bold claim that “humans drive with eyes and a brain, so our cars will too” sparked one of the most polarizing debates in autonomous vehicle (AV) technology: Can vision-only systems truly compete with—or even outperform—multi-sensor fusion architectures?

Read more