NeVer: NEural NEtwork in VERilog

Check out the main project: ImProVe: IMage PROcessing using VErilog

| Name | NeVer |

|---|---|

| Description | NeVer implements a neural network in Verilog for better hardware acceleration of image processing tasks |

| Start | 28 Feb 2025 |

| Repository | NeVer🔗 |

| Type | Individual |

| Level | Beginner |

| Skills | Image Processing, HDL, Computer Vision, Programming, ML |

| Tools Used | Verilog, Icarus, Perl, TCL, Quartus, C++, Python, NumPy |

| Current Status | Ongoing (Active) |

| Progress | - Implemented detection of MNIST digits (0-9) - Added support for EMNIST, enabling classification of 62 character classes - Integrated real-time inference with a Tkinter-based character drawing interface, achieving approx 1.5s latency per prediction in simulation |

| Next Steps | - Ensure the top module is synthesizable - Optimize the design for parallel processing, leveraging Multiply-Accumulate (MAC) operations - Enhance floating-point multiplication and division support for improved computational efficiency |

Project Overview

This project presents a novel, fully hardware-based neural network inference pipeline in Verilog, targeting the 62-class EMNIST dataset (digits + upper/lowercase letters). It includes two separate implementations: one using IEEE 754 single-precision floating-point arithmetic, and another using 64-bit fixed-point arithmetic. Both versions are designed without relying on external IP cores and are built entirely using free and open-source tools.

All core operations — matrix multiplication, bias addition, ReLU activation, and softmax — are implemented from scratch in Verilog. C++/Python is used only for generating memory modules, while TCL and Perl scripts automate simulation workflows, memory regeneration, and output logging.

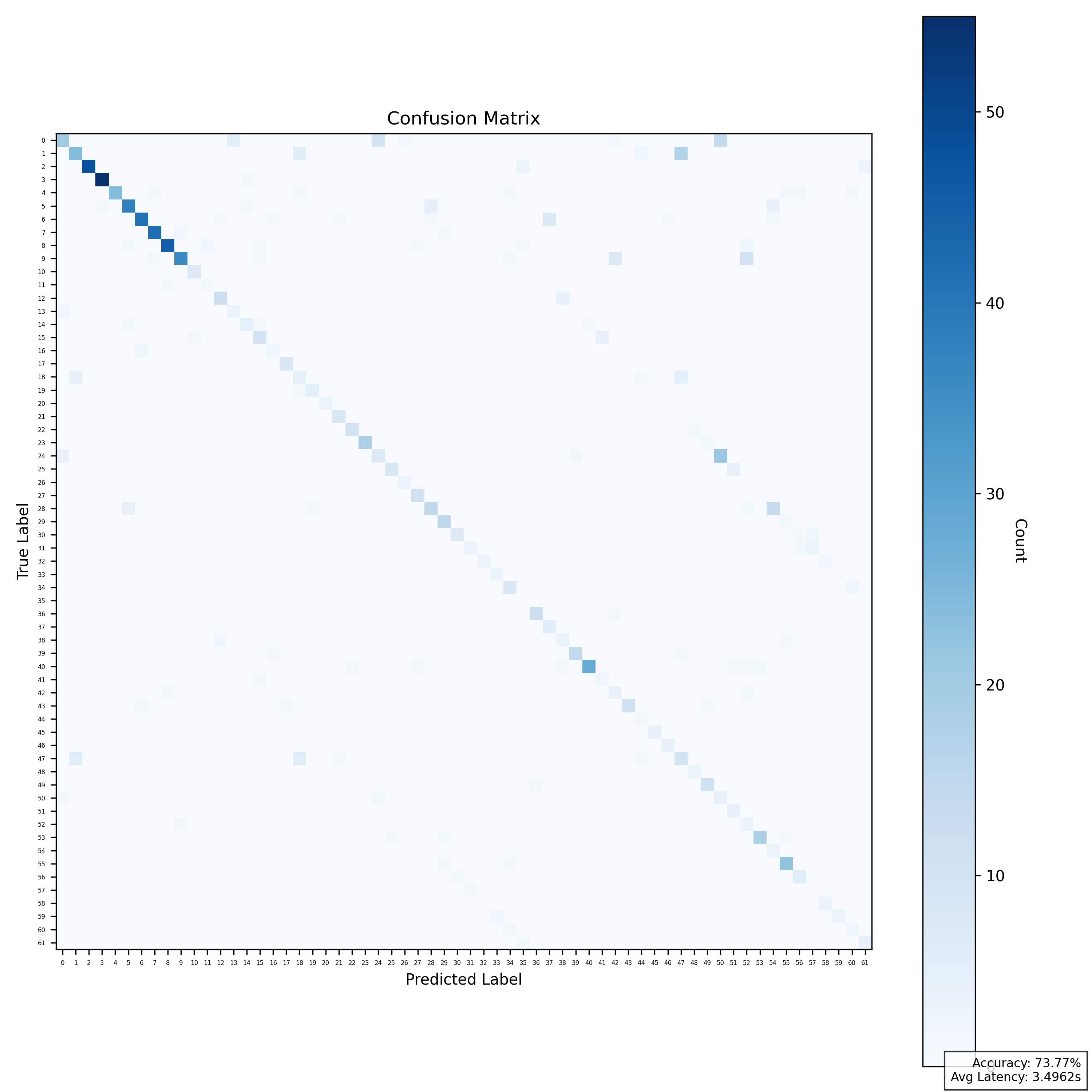

Confusion matrix for 1000 random samples

Focusing more on inference and preprocessing than training, this open-source setup achieves >90% training accuracy (>70% dev acc) and >75% Verilog-level classification accuracy, with average image inference latency ~1.5s.

The aim is to hardware accelerate neural network inference and image processing tasks by leveraging systolic arrays for efficient multiply-accumulate (MAC) operations, enabling high-throughput and low-latency execution fully in hardware

This modular architecture can be extended to larger image classification datasets. A slight drop in accuracy is expected because the design uses an FCNN with fixed-point arithmetic rather than a CNN with floating-point arithmetic.

Currently, the neural network is implemented as a 64-bit design with minimal parallelism, prioritizing correctness and resource efficiency. The design uses a Fully Connected Neural Network (FCNN) architecture rather than a traditional CNN, thereby avoiding complex layers such as Batch Normalization and other mathematically intensive operations that are difficult to implement efficiently in hardware. As a trade-off, the design settles for relatively low inference accuracy to maintain full synthesizability and meet hardware constraints. Ongoing work focuses on improving both accuracy and latency within these limits, with the ultimate goal of delivering a fully functional implementation on FPGA.

| Sample Size | Floating-Point (IEEE 754) | Fixed-Point (64-bit) |

|---|---|---|

| 10 samples | 9 correct (90% accuracy) | 8 correct (80% accuracy) |

| 100 samples | 76 correct (76% accuracy) | 64 correct (64% accuracy) |

| 1000 samples | 737 correct (73.7% accuracy) | 658 correct (65.8% accuracy) |

Fixed Point Structure

Feel free to refer to this for more details…

NOTE:

I highly recommend checking out the main project, as this is just a subset. The main project focuses on image processing algorithms, and working on ImProVe (IMage PROcessing using VErilog) has made the learning curve for this project much easier.

NeVer (Neural Network in Verilog) is a key subproject within the broader ImProVe initiative. This work focuses on the design and implementation of a fully functional neural network entirely in Verilog, with the objective of optimizing it for efficient hardware acceleration.

Please note that the names of these projects are not meant to be taken too seriously. The names like ImProVe or NeVer may not fully reflect their functions – ImProVe doesn’t actually improve images, but processes them, and NeVer isn’t about something “never-implemented” – many have done it before. The names just make it easier for me to organize folders and code

Motivation

I was inspired by this video: Building a neural network FROM SCRATCH (no TensorFlow/PyTorch, just NumPy & math) by Samson Zhang. The video demonstrates a simple 2 layer neural network for recognizing MNIST digits (0-9)

I expanded on this by:

- Using two hidden layers instead of one

- Implementing Adam optimizer along with vanilla SGD, replacing basic gradient descent

- Extending support for 62 classes (0-9, A-Z, a-z)

- Using Verilog for inference instead of C++/Python

- Incorporating Tkinter for manually inputting handwritten characters irl, allowing direct interaction with the trained model

Project Roadmap

- Train a model using TensorFlow/PyTorch for quick validation → Infer in C++/Python

- Train using NumPy only → Infer in C++/Python

- Train using NumPy only → Infer in Verilog

- Train using pure Python (no NumPy, user-defined functions) → Infer in Verilog

- Implement a single neuron in Verilog for training

- Train directly in Verilog → Infer in Verilog

- Optimize the implementation using parallel processing, MAC units, etc. (systolic arrays)

- Make the entire Verilog implementation synthesizable

Current Status

- Successfully performed inference in Verilog using parameters trained in Python (Colab) with NumPy, achieving >90% accuracy (>70% dev acc) on the test dataset

- Achieved approximately 1.5s of inference latency in Verilog simulation.

- Implemented inference for both MNIST (digits 0-9) and EMNIST (62 classes: 0-9, A-Z, a-z)

- Training with 2000 iterations:
  - First 1500 iterations: Adam optimizer
  - Last 500 iterations: Vanilla SGD (reason: Adam converges faster initially, but switching to SGD helps refine convergence); later switched to “SGD with Momentum”

- Using Tkinter for drawing input characters, which are then processed and fed into Verilog for inference

- Achieved <5s inference latency per prediction in simulation with FSM-driven layer evaluation

- Actively working on image reconstruction from NumPy-trained model parameters

- Replacing `real` (IEEE 754) in the Verilog top module with fixed-point formats like Q32.32, Q32.16, Q24.8 using sfixed from IEEE 1076.3 (ieee.fixed_pkg)
  - Aiming for better synthesis compatibility, but currently observing accuracy loss due to quantization

- Actively tuning fixed-point precision to balance accuracy and hardware performance

- Exploring Quantization Aware Training (QAT) to precondition the model for quantization effects during training and recover lost accuracy

The `real` data type has been replaced with a fixed-point representation for all variables that used it. There is a moderate decrease in accuracy (approximately a 10-14% drop observed on 100 random samples) compared to the version using `real`. I’m currently working on reducing this loss.
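To make the quantization loss concrete, here is a minimal Python sketch of a Q24.8-style conversion and multiply. It assumes a 32-bit signed word with 8 fractional bits and saturation on overflow; the helper names (`to_fixed`, `to_float`, `fixed_mul`) are hypothetical and are not taken from the repository.

```python
FRAC_BITS = 8    # assumed: Q24.8 keeps 8 fractional bits
TOTAL_BITS = 32  # assumed: 24 integer bits (sign included) + 8 fractional bits


def to_fixed(x, frac_bits=FRAC_BITS, total_bits=TOTAL_BITS):
    """Quantize a float to a signed fixed-point integer, saturating on overflow."""
    scaled = round(x * (1 << frac_bits))
    lowest = -(1 << (total_bits - 1))
    highest = (1 << (total_bits - 1)) - 1
    return max(lowest, min(highest, scaled))


def to_float(q, frac_bits=FRAC_BITS):
    """Convert a fixed-point integer back to a float."""
    return q / (1 << frac_bits)


def fixed_mul(a, b, frac_bits=FRAC_BITS):
    """Multiply two fixed-point values.

    The raw product carries 2 * frac_bits fractional bits, so it is shifted
    right by frac_bits (an arithmetic shift, which rounds toward -infinity).
    """
    return (a * b) >> frac_bits


w, x = 0.7314, -1.25
qw, qx = to_fixed(w), to_fixed(x)
product = to_float(fixed_mul(qw, qx))
error = abs(product - w * x)
print(qw, qx, product, error)
```

Each multiply loses a little precision, and those errors accumulate over the hundreds of multiply-accumulate steps in a layer, which is one plausible source of the accuracy gap described above.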

Current Workflow

- Image Processing: `draw.py` → Converts the Tkinter drawing into `drawing.jpg`
- Grayscale Image Conversion: `img2bin.py` → Generates `mnist_single_no.txt` (integer values from 0-255)
- Vectorization: `arr2row.v` → Flattens the 2D 28×28 matrix into `input_vector.txt` after preprocessing
- Memory Preloading: `memloader_from_inp_vec.py` → Converts `input_vector.txt` into Verilog memory (`image_memory.v`)
- Weight & Bias Preloading: `wtbs_loader.py` → Converts pretrained weight & bias TXT files into Verilog memory (`W1_memory.v`, `b1_memory.v`, etc.)
- Inference in Verilog: `emnist_with_tb.v` → Top module: `emnist.v`
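The vectorization step is done in Verilog in this project, but the idea can be sketched in a few lines of Python. The sketch below assumes row-major order and a single space-separated output line; the function names are hypothetical.

```python
def flatten_rows(matrix):
    """Row-major flatten: row 0 first, then row 1, and so on."""
    return [pixel for row in matrix for pixel in row]


def to_vector_line(matrix):
    """Format the flattened pixels as one space-separated line of integers."""
    return " ".join(str(p) for p in flatten_rows(matrix))


# Hypothetical 28x28 image whose pixel values encode their position,
# which makes the ordering easy to check by eye.
image = [[(r * 28 + c) % 256 for c in range(28)] for r in range(28)]
vector = flatten_rows(image)
print(len(vector), vector[:5])
```

If the real `arr2row.v` uses a different traversal order, only `flatten_rows` would need to change.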

Folder Structure

.

├───bin/ # Intermediate image text files

│ └───*.txt

├───build/ # Auto-generated CMake build directory + Verilog simulation outputs

│ ├───*.vvp # Icarus Verilog compiled simulation files

│ └───<C++ targets> # CMake-built executables and object files

├───data/ # Stores trained model parameters and images

│ ├───biases/ # Text files containing trained bias values

│ │ └───*.txt

│ ├───weights/ # Text files containing trained weight values

│ │ └───*.txt

│ └───*.png # Image files

├───metrics-setup/ # Evaluation metrics and related resources

│ ├───results/ # Contains folders with classification reports and conf matrices

│ ├───samples/ # Image dirs in N_X_R_Y/ format (randomised samples for metrics eval)

│ └───*.py # Python Scripts to download dataset

│ └───*.tcl # TCL scripts to evaluate metrics

│ └───*.pl # Perl scripts to evaluate metrics

├───run/ # Setup and automation scripts for different platforms

│ ├───linux-or-macOs/ # Setup and automation scripts for Linux or macOS

│ │ ├───*.sh # Bash files

│ │ └───Makefile # Makefile for automating simulation/build steps

│ ├───perl/ # Scripts for automating simulation and clean-up (Perl)

│ │ └───*.pl

│ ├───python/ # Scripts for automating simulation and clean-up (Python)

│ │ └───*.py

│ ├───tcl/ # Scripts for automating simulation and clean-up (TCL)

│ │ └───*.tcl

│ └───windows/ # Setup and automation scripts for Windows

│ └───*.bat

├───scripts/ # Python/C++ scripts for image preprocessing

│ └───*.py

│ └───*.cpp

│ └─── CMakeLists.txt

├───sim/ # Test benches for verifying Verilog modules

│ └───*.v

└───src/ # Source Verilog code

├───fixed-point/ # Top-level modules with fixed-point rep (64-bit)

│ └───*.v

├───image/ # Image memory modules

│ └───*.v

├───params/ # Memory modules for weights and biases

│ └───*.v

└───top/ # Top-level design module and supporting modules (IEEE754/real)

└───*.v

| Platform | Command |
|---|---|
| Windows | `cd path-to-clean-dir/`<br>`./run/win32/setup.bat` |
| Linux/macOS | `cd path-to-clean-dir/`<br>`make -C ./run/linux-or-macOs/` |
| Perl (Cross-Platform) | `cd path-to-clean-dir/`<br>`perl ./run/perl/workflow.pl` |
| Tcl (Cross-Platform) | `cd path-to-clean-dir/`<br>`tclsh ./run/tcl/workflow.tcl` |

Py Command Workflow

cd path-to-clean-dir/ # navigate to your project directory

python ./scripts/draw.py # opens a Tkinter interface to draw an image by hand

python ./scripts/img2bin.py # converts the drawn image into a text-based binary file

iverilog -o ./build/imgvec.vvp ./src/*.v # compiles Verilog code that returns a 1D vector after all preprocessing

vvp ./build/imgvec.vvp # runs the compiled imgvec Verilog simulation

python ./scripts/wtbs_loader.py # (only needed for first build) generates weight and bias memory Verilog files

python ./scripts/memloader_from_inp_vec.py # loads the converted image vector into the image_memory module

iverilog -o build/prediction_test.vvp ./sim/*.v ./src/top/*.v ./src/image/*.v ./src/params/*.v # compiles entire prediction design

vvp ./build/prediction_test.vvp # runs the compiled prediction simulation

C++ Command Workflow

cd path-to-clean-dir/ # navigate to your project directory

cmake -S scripts -B build # configure build with CMake

cmake --build build # build all executables in build/

./build/draw # run draw executable (draw image with GUI)

./build/img2bin # convert drawn image to binary text file

iverilog -o ./build/imgvec.vvp ./src/*.v # compile Verilog to generate 1D image vector

vvp ./build/imgvec.vvp # run imgvec Verilog simulation

./build/wtbs_loader # (only needed for first build) generate weight and bias memory files

./build/memloader_from_inp_vec # load image vector into image_memory module

iverilog -o build/prediction_test.vvp ./sim/*.v ./src/top/*.v ./src/image/*.v ./src/params/*.v # compile full prediction design

vvp ./build/prediction_test.vvp # run final prediction simulation

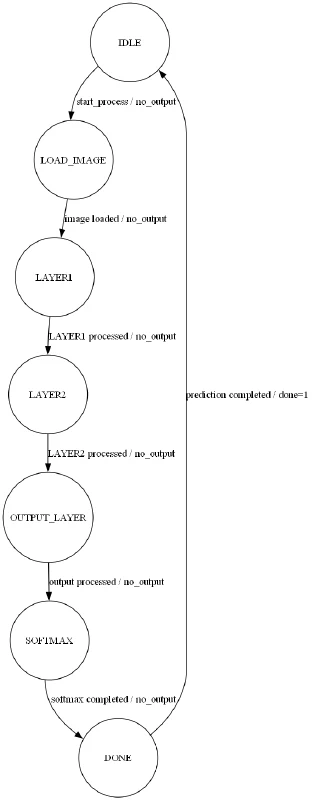

State Diagram (generated using GraphViz tool)

Memory Initialization

- Structure & Initialization: A simple `reg [WIDTH-1:0] mem [0:DEPTH-1];` array whose entries are hard-coded in an `initial` block at elaboration time, causing the synthesis tool to bake those constants into the FPGA configuration bitstream.
- Run-Time Behavior: Acts as a pure, read-only memory (ROM): you present an address, and on the next clock (or combinationally, depending on inference) you get the stored value. No write ports or file I/O occur after configuration.
- Implementation Styles:
  - Small arrays (tens to low hundreds of bits) typically map to distributed LUT-RAM.
  - Larger arrays (kilobits) are inferred as dedicated block RAM macros.
- Why This Pattern: Embedding all data directly in RTL avoids reliance on external files and runtime system tasks like `$readmemh`, `$fopen` or custom parsers. That makes simulation setups simpler (no file-path issues), keeps testbenches file-agnostic, and ensures cross-tool portability and deterministic initialization.
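As an illustration of how a script could emit such a memory module, here is a minimal Python sketch. The port names (`addr`, `data`), the combinational read, and the function `make_rom_module` are assumptions made for this example; the actual output of `memloader_from_inp_vec.py` and `wtbs_loader.py` may look different.

```python
def make_rom_module(name, values, width=8):
    """Return Verilog source for a ROM whose contents are set in an initial block."""
    for v in values:
        if not 0 <= v < (1 << width):
            raise ValueError(f"value {v} does not fit in {width} unsigned bits")
    depth = len(values)
    addr_bits = max(1, (depth - 1).bit_length())
    lines = [
        f"module {name} (",
        f"    input  wire [{addr_bits - 1}:0] addr,",
        f"    output wire [{width - 1}:0] data",
        ");",
        f"    reg [{width - 1}:0] mem [0:{depth - 1}];",
        "    initial begin",
    ]
    for i, v in enumerate(values):
        lines.append(f"        mem[{i}] = {width}'d{v};")
    lines += ["    end", "    assign data = mem[addr];", "endmodule"]
    return "\n".join(lines)


# Hypothetical 4-entry memory, just to show the generated text
source = make_rom_module("image_memory", [0, 128, 255, 64])
print(source)
```

Generating the constants as plain assignments keeps the module free of file I/O, which is the property the list above relies on.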

Technical Details

- The top module (`emnist.v`) follows an FSM-based approach with minimal to no overlap
- Softmax Approximation: Using Taylor series expansion for exponentiation
- Pipeline Strategy:
  - Currently: Using coarse-grained pipelining, meaning major computation blocks execute sequentially with some latency
  - Next Steps: Implement fine-grained pipelining, where smaller operations are parallelized for higher throughput
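The Taylor-series softmax can be sketched in Python as follows. The number of terms (12) and the max-subtraction step are assumptions for this example, not details confirmed from `softmax.v`.

```python
import math


def exp_taylor(x, terms=12):
    """Approximate e**x with the first `terms` terms of its Taylor series around 0."""
    total, term = 1.0, 1.0
    for n in range(1, terms):
        term *= x / n  # builds x**n / n! incrementally, avoiding explicit factorials
        total += term
    return total


def softmax_taylor(logits, terms=12):
    """Softmax built on the truncated series.

    Subtracting the maximum keeps every argument <= 0, which bounds the
    largest exponential at 1.
    """
    m = max(logits)
    exps = [exp_taylor(v - m, terms) for v in logits]
    s = sum(exps)
    return [e / s for e in exps]


logits = [0.5, 2.0, 1.0, 1.5]
approx = softmax_taylor(logits)
exact_den = sum(math.exp(v) for v in logits)
exact = [math.exp(v) / exact_den for v in logits]
max_err = max(abs(a - b) for a, b in zip(approx, exact))
print(approx, max_err)
```

A truncated series is only accurate near zero, so logits that are far apart would need more terms or range reduction. That limitation is one reason a lookup table is an attractive alternative.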

To-Do

- Implement LUTs for efficient exponential computation in Softmax

- Modify the top module to make it synthesizable and improve accuracy

- Enable OCR functionality to detect any character in a given image

- Optimize for parallel processing, better pipelining, and hardware acceleration

Demos

Here are some demo videos

MNIST Digit Recognition

I developed a fully connected neural network from scratch in Google Colab, avoiding frameworks like TensorFlow and Keras. Instead, I relied on NumPy for numerical operations, Pandas for data handling, and Matplotlib for visualization. The model was trained on sample_data/mnist_train_small.csv, a dataset containing flattened 784-pixel images of handwritten digits. Data preprocessing included normalizing pixel values (dividing by 255) and splitting the dataset into a training set and a development set, with the first 1000 samples reserved for validation. The dataset was shuffled before training to enhance randomness, and labels (digits 0-9) were stored separately

The network architecture consists of an input layer (784 neurons), a hidden layer (128 neurons, ReLU activation), and an output layer (10 neurons, softmax activation). Model parameters (weights and biases) were initialized randomly and updated via gradient descent over 500 iterations with a learning rate of 0.1. Training followed the standard forward propagation for computing activations and backpropagation for updating parameters. Accuracy was recorded every 10 iterations. To ensure compatibility with Verilog, all weights and biases were scaled by 2^13 and stored as integer values in text files (`W1.txt`, `b1.txt`, etc.), eliminating the need for floating-point operations in hardware. These trained parameters were later used for inference on new images, verifying accuracy on the development set before deployment in Verilog for real-time classification.

The trained model, based on sample_data/mnist_train_small.csv, achieved over 90% accuracy. It generates `W1`, `W2`, `b1`, and `b2` text files containing weight and bias values. These parameters are used in Verilog to predict digits from an input image stored in `input_vector.txt`, formatted as 784 space-separated integers. The Verilog module reads this data, performs inference, and displays the predicted output using `$display`. The original CSV file was converted into a space-separated text format, where each row contains a digit followed by 784 pixel values (785 total). During inference, the first value (label) is discarded, ensuring the model classifies the input image without prior knowledge of its actual label.

The Verilog implementation of the neural network consists of an input layer (784 neurons), a hidden layer (128 neurons), and an output layer (10 neurons). It loads pre-trained weights and biases from `W1.txt`, `b1.txt`, `W2.txt`, and `b2.txt`, along with an input vector from `input_vector.txt`. Input values are normalized by dividing by 255.0, while weights and biases are scaled by 2^13 for fixed-point arithmetic. The hidden layer applies a fully connected transformation (W1 * input + b1) followed by ReLU activation, while the output layer computes another weighted sum (W2 * hidden + b2). Instead of applying softmax, the model identifies the predicted class by selecting the index of the highest output value.

The module ensures correct file reading before computation begins. Forward propagation is executed sequentially, with an initial delay for loading weights, biases, and input values. After processing activations in both layers, the output layer iterates through its neurons to determine the class with the highest activation. The classification result is displayed via `$display`. This hardware implementation bypasses complex activation functions like softmax while maintaining classification accuracy through direct maximum-value selection.

In the latest iterations, Python/C++ scripts convert text-based weight and bias files into synthesizable Verilog memory blocks. These are stored in register modules, which are instantiated in the top-level module. The image input is handled in a similar way.

Currently, the top module includes a few non-synthesizable constructs, such as `$display`, `$finish`, and the `real` datatype. These were relocated to the testbench in later versions to improve synthesizability. Additionally, I am replacing `real` with a fixed-point representation (Q24.8) to make the design fully synthesizable. Future versions will output the classification result to a seven-segment display via case statements, replacing `$display`.

Moving forward, I am working on transitioning training from Python to Verilog, with the aim of implementing a fully synthesizable neural network for hardware-based inference. While training on FPGAs is not practical and not the primary goal of this project, inference remains the main focus. If time permits, I may also explore the training aspect further.
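The integer-only forward pass described above can be sketched in Python on a toy network. The weights below are hypothetical (not trained parameters), and rescaling by a 13-bit right shift after each dot product is an assumption about how the 2^13 factor is handled.

```python
SCALE = 1 << 13  # values are stored as round(value * 2**13)


def quantize(values):
    """Scale floats by 2**13 and round to integers."""
    return [round(v * SCALE) for v in values]


def dense_int(weights_q, bias_q, inputs_q):
    """Integer W*x + b.

    Each product carries a factor of SCALE**2, so the accumulated sum is
    shifted right by 13 bits before the (singly scaled) bias is added.
    """
    out = []
    for row, b in zip(weights_q, bias_q):
        acc = sum(w * x for w, x in zip(row, inputs_q))  # multiply-accumulate
        out.append((acc >> 13) + b)
    return out


def relu(values):
    return [v if v > 0 else 0 for v in values]


def argmax(values):
    return max(range(len(values)), key=lambda i: values[i])


# Toy 3-input, 2-hidden, 2-output network
W1 = [quantize([0.5, -0.25, 0.1]), quantize([-0.3, 0.8, 0.2])]
b1 = quantize([0.05, -0.1])
W2 = [quantize([1.0, -0.5]), quantize([-0.7, 0.9])]
b2 = quantize([0.0, 0.1])
pixels = [255, 0, 128]
x = quantize([p / 255 for p in pixels])  # normalize to [0, 1], then scale

hidden = relu(dense_int(W1, b1, x))
output = dense_int(W2, b2, hidden)
predicted = argmax(output)
print(hidden, output, predicted)
```

Because softmax is monotonic, taking the argmax of the raw outputs yields the same class as taking it after softmax, which is why the exponentials can be skipped for classification.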

EMNIST Character Recognition

This model is trained on the EMNIST ByClass dataset (source), which includes 62 character classes—digits (0-9), uppercase letters (A-Z), and lowercase letters (a-z). The dataset undergoes preprocessing, where it is converted into a CSV format, normalized, reduced in dimensionality, and shuffled before training to improve generalization

Neural Network Architecture

The model consists of multiple layers:

- Input Layer: 784 neurons (28×28 grayscale pixel values)

- First Hidden Layer: 256 neurons (`W1`: 256×784, `b1`: 256×1)
- Second Hidden Layer: 128 neurons (`W2`: 128×256, `b2`: 128×1)
- Output Layer: 62 neurons (`W3`: 62×128, `b3`: 62×1)
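The layer sizes above determine how much memory and how many multiply-accumulate operations one inference needs. A short sketch of that arithmetic, assuming every parameter occupies one 64-bit word:

```python
LAYERS = [784, 256, 128, 62]  # input, hidden 1, hidden 2, output
WORD_BITS = 64                # assumed storage width per parameter


def count_parameters(layers):
    """Each layer has n_out * n_in weights plus n_out biases."""
    return sum(n_out * n_in + n_out for n_in, n_out in zip(layers, layers[1:]))


def mac_operations(layers):
    """One multiply-accumulate per weight for a single forward pass."""
    return sum(n_out * n_in for n_in, n_out in zip(layers, layers[1:]))


params = count_parameters(LAYERS)
macs = mac_operations(LAYERS)
rom_bytes = params * WORD_BITS // 8
print(params, macs, rom_bytes)  # 241854 241408 1934832
```

With minimal parallelism, those roughly 241k multiply-accumulates run largely one after another, which is why MAC-level parallelism is listed among the next steps.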

Training Process

The network is trained with forward propagation, where activations are computed at each layer through matrix multiplications followed by ReLU in the hidden layers, and backpropagation, which updates the weights using the gradient of the loss function. The dataset is shuffled before each epoch so that sample order does not bias the updates. Training runs over multiple epochs using a combination of the Adam optimizer and Stochastic Gradient Descent (SGD) to improve convergence.
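The Adam-then-SGD schedule can be illustrated on a one-parameter toy problem. The loss, learning rates, and Adam constants below are assumptions chosen for the example; only the 1500/500 split comes from this README.

```python
import math


def grad(w):
    """Gradient of the toy loss (w - 3)**2; stands in for backpropagation."""
    return 2.0 * (w - 3.0)


def train(w=0.0, adam_steps=1500, sgd_steps=500, lr_adam=0.01, lr_sgd=0.01,
          beta1=0.9, beta2=0.999, eps=1e-8):
    """Run Adam first, then finish with plain gradient steps."""
    m = v = 0.0
    for t in range(1, adam_steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g      # first-moment (mean) estimate
        v = beta2 * v + (1 - beta2) * g * g  # second-moment estimate
        m_hat = m / (1 - beta1 ** t)         # bias correction for early steps
        v_hat = v / (1 - beta2 ** t)
        w -= lr_adam * m_hat / (math.sqrt(v_hat) + eps)
    for _ in range(sgd_steps):
        w -= lr_sgd * grad(w)                # vanilla gradient step
    return w


w_final = train()
print(w_final)
```

In the real training loop, `grad` would be the backpropagated gradient of each weight matrix, and the same two-phase update would be applied element-wise.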

To ensure compatibility with hardware, weights and biases are scaled by 2^13 and stored as integers in text files (W1.txt, b1.txt, etc.), since Verilog's floating-point `real` type is available in simulation but is not synthesizable.

Inference in Verilog

The inference process in Verilog follows a similar structure but accommodates additional layers and character classes. Input images are read from input_vector.txt, normalized, and processed through the neural network using preloaded weights and biases. The computation follows:

- hidden1 = ReLU(W1 * input + b1)
- hidden2 = ReLU(W2 * hidden1 + b2)
- output = W3 * hidden2 + b3

The index of the maximum output value corresponds to the predicted character, which is mapped to "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz" and displayed using $display
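The index-to-character mapping is simple enough to show directly. This Python sketch mirrors the lookup described above; the function name and the example output vector are hypothetical.

```python
CLASSES = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz"


def predict_char(outputs):
    """Return the character whose output neuron has the largest value."""
    if len(outputs) != len(CLASSES):
        raise ValueError("expected one output per class")
    best = max(range(len(outputs)), key=lambda i: outputs[i])
    return CLASSES[best]


scores = [0] * 62
scores[17] = 500  # hypothetical output vector with its peak at index 17
print(predict_char(scores))
```

Digits occupy indices 0-9, uppercase letters 10-35, and lowercase letters 36-61, so index 17 corresponds to "H".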

Pipeline and Data Flow

The following scripts handle image processing, vectorization, and memory loading for Verilog inference:

- Drawing & Image Processing:
  - `draw.py`: Creates a square canvas in Tkinter for character input
  - After drawing, the script grayscales, inverts, compresses the image to 28×28 resolution, and saves it as `drawing.jpg`
- Data Conversion & Preprocessing:
  - `img2bin.py`: Converts `drawing.jpg` into a 28×28 grayscale pixel matrix (`mnist_single_no.txt`)
  - Other Verilog Files: Flatten the 2D array into a 1D vector (784 values) and store it in `input_vector.txt` after all preprocessing
- Memory Module Generation:
  - `memloader_from_inp_vec.py`: Converts `input_vector.txt` into a synthesizable Verilog memory module (`image_memory.v`)
  - `wtbs_loader.py`: Converts `W1`, `W2`, `W3`, `b1`, `b2`, `b3` into Verilog memory modules (`W1_memory.v`, `b1_memory.v`, etc.)

Final Hardware Implementation

All these components are instantiated in the top module (emnist_with_tb.v), along with a testbench (emnist_nn_tb.v). The system successfully predicts handwritten characters in real-time

In the demo, I tested the characters “H”, “f”, and “7”, each representing different EMNIST subclasses (uppercase letters, lowercase letters, and numbers)

Additionally, I implemented a coarse-grained pipelined fully connected neural network using a Finite State Machine (FSM), integrating a Softmax function approximation via Taylor series expansion to improve computational efficiency

Raw Demo Shots (Present)

| UpperCase Alphabet | LowerCase Alphabet | Single Digit Number |
|---|---|---|
| Actual: R, Prediction: R | Actual: i, Prediction: i | Actual: 9, Prediction: 9 |

Python/C++ Based Pre-processing Workflow (Present)

USER is instructed to fill the canvas to skip ROI steps

| Original Drawing | Grayscale Image | Inverted Image | Text Matrix |

|---|---|---|---|

|  |  |  |

Verilog Based Pre-processing Workflow (Currently Working to Refine)

Exploring bus interconnection options for automated workflow

| Original | Downscaled (28×28) | Grayscale | Contrasted |

|---|---|---|---|

|  |  |  |

| Edge Detection | Bounding Box | Box Overlayed | Region of Interest |

|---|---|---|---|

|  |  |  |

| ROI Zoom to Fit | ROI Resized 28x28 | ROI Padded | Inverted |

|---|---|---|---|

|  |  |  |

| Mirrored (Vertical Axis) | Rotated 90° CCW |

|---|---|

|  |

| File | What it Does | Why It’s Done | Output |
|---|---|---|---|
| draw.py | User draws a character | Generates the initial input | drawing.png |
| img2rgb.py | Splits image into R, G, B channels | Needed for hardware-friendly memory modules | r_memory.v, g_memory.v, b_memory.v |
| downscale.v | Downscales 600×600 image to 28×28 | Inference works on 28×28 inputs | r_memory_28.v, g_memory_28.v, b_memory_28.v |
| grayscale.v | Converts RGB to grayscale | Removes color bias, simplifies processing | gray_28.png |
| contrast.v | Enhances contrast | Makes strokes stand out better | grayc_28.png |
| prewitt.v | Applies Prewitt edge detection | Finds the outline of the character | sumapp.png |
| bound.v | Detects bounding box from edge outline | Identifies Region of Interest (ROI) | bound_box.png |
| boxcut.v | Applies bounding box to mask out external pixels | Isolates the character region | roi.png |
| boxcutF.v | Crops the ROI and zooms to fit the canvas | Removes zero-padding, centers the content | rois.png |
| resize.v | Resizes cropped ROI to 28×28 | Standardizes input size for neural network | roi_28.png |
| padding.v | Adds 1-pixel padding on all sides, resizes again to 28×28 | Adds margin, keeps stroke intact | roip_28.png |
| mirror.v | Mirrors image about the vertical axis | Matches EMNIST character orientation | roipim_28.png |
| rotate.v | Rotates image 90° counter-clockwise | Final transformation to match EMNIST format | roipimr_28.png |
| mat2row.v | Converts 28×28 matrix to a 784-length vector | Vectorizes the input | input_vector.txt |
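The last two image transforms in the table (mirror, then rotate 90° counter-clockwise) are worth a closer look. Under the indexing convention assumed in this Python sketch, applying them in that order is equivalent to a matrix transpose. The function names are hypothetical and the Verilog modules may index pixels differently.

```python
def mirror_vertical_axis(img):
    """Flip left-right: every row is reversed."""
    return [row[::-1] for row in img]


def rotate_90_ccw(img):
    """Rotate counter-clockwise: the rightmost column becomes the top row."""
    h, w = len(img), len(img[0])
    return [[img[c][w - 1 - r] for c in range(h)] for r in range(w)]


def transpose(img):
    """Swap rows and columns."""
    return [list(col) for col in zip(*img)]


# Small hypothetical image so the result is easy to inspect
img = [[1, 2, 3],
       [4, 5, 6],
       [7, 8, 9]]
combined = rotate_90_ccw(mirror_vertical_axis(img))
print(combined)
print(combined == transpose(img))
```

If the equivalence holds for the hardware modules as well, the two stages could in principle be merged into a single address swap when reading the image memory.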

UPDATED WORKFLOW

Step 1: Drawing

- draw.py → drawing.png

Step 2: Image Preprocessing

- img2rgb.py → r_memory.v, g_memory.v, b_memory.v

- downscale.v → r_memory_28.v, g_memory_28.v, b_memory_28.v

- grayscale.v → gray_28.png

- contrast.v → grayc_28.png

- prewitt.v → edge outline (sumapp.png)

- bound.v → bounding box (bound_box.png)

- boxcut.v → masked ROI (roi.png)

- boxcutF.v → cropped, zoomed ROI (rois.png)

- resize.v → resize to 28x28 (roi_28.png)

- padding.v → padded and resized 28x28 (roip_28.png)

- mirror.v → mirror about vertical axis (roipim_28.png)

- rotate.v → rotate 90° CCW (roipimr_28.png)

- mat2row.v → vectorized input (input_vector.txt)

Step 3: Memory Loading

- memloader_from_inp_vec.py → image_memory.v

- wtbs_loader.py → W1_memory.v, W2_memory.v, W3_memory.v

- wtbs_loader.py → b1_memory.v, b2_memory.v, b3_memory.v

Step 4: Neural Network Inference (Verilog)

- emnist_nn.v → Top module
  - Instantiates relu.v, softmax.v
  - Instantiates W1/W2/W3_memory.v and b1/b2/b3_memory.v
  - Instantiates image_memory.v
- emnist_nn_tb.v → Testbench
  - Loads memories
  - Triggers inference
  - Displays prediction

Step 5: Output

- Final predicted digit/character from Verilog simulation

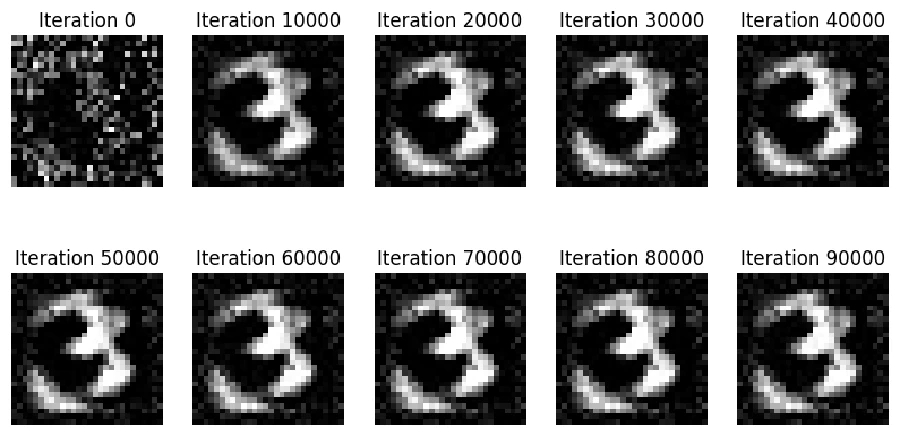

Image Reconstruction Trials

Image reconstruction works by taking random noise as the initial seed image and passing it through layers formed with trained parameters, iteratively minimizing the loss. This way, we gradually obtain the average image for each digit. Currently, I’m working on refining the process in Python with MNIST data, which will eventually be translated into Verilog. The process is taking a significant amount of time per image (even in Python), so you can imagine the challenge in Verilog. I’m still testing the Verilog implementation and aiming to optimize it to achieve better speed performance compared to the Python version.
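The reconstruction idea can be sketched in Python on a deliberately tiny model. The sketch assumes a single linear layer followed by softmax and uses hypothetical weights, so it is a simplification of the multi-layer network used in the project.

```python
import math
import random


def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def reconstruct(W, target, steps=200, lr=0.5, seed=0):
    """Start from noise and descend the cross-entropy loss with respect to the input."""
    rng = random.Random(seed)
    n_in = len(W[0])
    x = [rng.random() for _ in range(n_in)]  # random seed image
    for _ in range(steps):
        p = softmax([sum(w * xi for w, xi in zip(row, x)) for row in W])
        err = [pk - (1.0 if k == target else 0.0) for k, pk in enumerate(p)]
        # For a linear layer followed by softmax: dLoss/dx = W^T (p - y)
        g = [sum(W[k][i] * err[k] for k in range(len(W))) for i in range(n_in)]
        # Gradient step on the input, keeping pixels in [0, 1]
        x = [min(1.0, max(0.0, xi - lr * gi)) for xi, gi in zip(x, g)]
    return x


# Hypothetical 3-class model over 4 "pixels" (not trained parameters)
W = [[2.0, -1.0, -1.0, 0.0],
     [-1.0, 2.0, -1.0, 0.0],
     [-1.0, -1.0, 2.0, 0.0]]
image = reconstruct(W, target=1)
print(image)
```

The weights stay fixed and only the input is updated, so each step needs a full forward pass plus a gradient computation per image, which is consistent with the long run times mentioned above.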

I’m fully aware that generating images on edge devices—especially using parameters from a trained model (not even GANs)—doesn’t quite align with the main objective of this project, mainly due to its limited real-world relevance. Still, I’m pursuing it out of curiosity and for experimental purposes. The idea of creating an image using Verilog is highly conceptual, but it’s a challenge I’m enjoying exploring—just for the sake of it. :)